Enterprises integrating AI into their workflows must be aware of the unique security risks that come with using models from providers like OpenAI. OpenAI has implemented some measures to protect its users' data security and privacy. Even so, enterprises need to understand the key risks they take on when they use OpenAI.

By taking a practical look at OpenAI data security risks, you can begin to mitigate the risks involved in adopting this new technology. This article covers what you need to know about OpenAI data security so you can make informed AI implementation decisions for your enterprise.

Why Does OpenAI Data Security Matter for Enterprises?

Seemingly every business, from startups to global enterprises, is looking for ways to embrace AI, lest it be left behind as this new wave of technology consumes the media and the world at large. Enterprises in particular, however, should be wary of integrating OpenAI into their tech stack without a proper risk assessment. Such an assessment can help to:

- Preserve confidentiality: Enterprises manage a range of sensitive data, from proprietary business details to payment card information and customer and employee PII. Limiting the exposure of this data helps enterprises uphold strict confidentiality policies.

- Assure regulatory compliance: Many industries operate under strict data protection regulations, like Europe's GDPR or California's CCPA. Understanding how OpenAI's data handling aligns with these regulations is key for businesses to maintain compliance and avoid legal and financial penalties.

- Build trust: In today's digital landscape, data security significantly influences an enterprise's reputation. Enterprises considering integrating OpenAI into their tech stack need to understand the specific data security risks in order to maintain stakeholder trust.

OpenAI’s Position on Security & Data Privacy

What does OpenAI say about its security measures and data privacy policies? Here are the highlights you should know:

- OpenAI is SOC 2 compliant

- The OpenAI API undergoes annual third-party penetration testing

- OpenAI states that they can help customers meet their regulatory, industry and contractual requirements, such as HIPAA

- OpenAI actively invites security researchers and ethical hackers to report security vulnerabilities via the Bug Bounty Program

Read more: OpenAI Docs - Security Policies

Top Security Risks for Enterprises Using OpenAI

Thoroughly evaluating the data security risks of using OpenAI in your enterprise can be time-consuming. It is still a necessary step to protect sensitive information and ensure compliance with data protection regulations, such as the General Data Protection Regulation (GDPR). Let's walk through the top security risks your enterprise should know.

ChatGPT conversation data can be used to retrain models

When enterprises use OpenAI's non-API consumer services, such as ChatGPT, it's important to note that the conversation data they provide, and the responses the model generates, could be used to train and fine-tune OpenAI's models. This means that any information shared with these services carries some risk of exposure. If you provide proprietary information to ChatGPT and it is used to train one of OpenAI's models, like GPT-3, that information could be exposed to other users. This is a serious risk for enterprises.

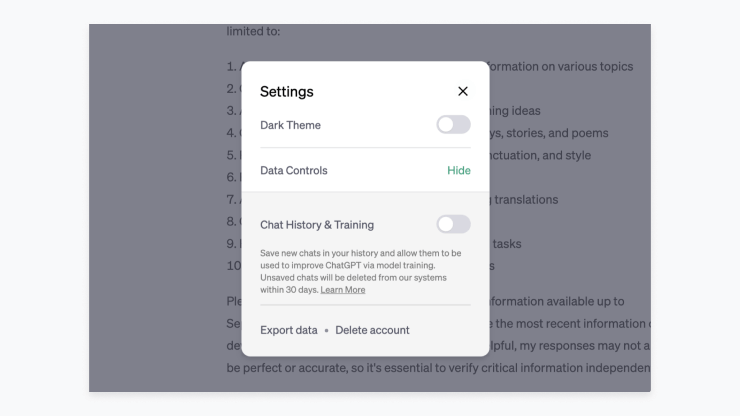

However, OpenAI does provide a way to mitigate this risk. Enterprises can formally request to opt out of having their interaction data used for model improvement. They do this by completing a form provided by OpenAI, which requires the organization's ID and the email address associated with the account owner. If there is just one account on which you want to disable data collection, you can simply turn off chat history in ChatGPT.

What else you need to know:

- Interaction data with non-API services like ChatGPT or DALL-E may be used to further train OpenAI's models.

- This potential usage exposes all conversation data to a degree of risk.

- OpenAI provides an opt-out process for enterprises that prefer their data not be used in this way, and you can submit an opt-out request through OpenAI's form. However, while this can be managed at the enterprise account level, anything employees provide to ChatGPT while using a personal account or while not logged in is not opted out by default.
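Because opt-out status depends on which account an employee happens to use, a complementary control is to scrub obvious personal data from text before it reaches any OpenAI service. Below is a minimal, regex-based redactor in Python. The patterns and placeholder labels are illustrative assumptions. They will miss many real-world formats and are no substitute for a dedicated data loss prevention (DLP) tool.

```python
import re

# Illustrative patterns only: common email, 13-16 digit card, and US-style
# phone formats. Real deployments need broader, tested detection.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "CARD": re.compile(r"\b(?:\d[ -]?){12,15}\d\b"),
    "PHONE": re.compile(r"\b\d{3}[-. ]\d{3}[-. ]\d{4}\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace each match with a [LABEL] placeholder and count replacements."""
    counts: dict[str, int] = {}
    for label, pattern in REDACTION_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts


if __name__ == "__main__":
    clean, found = redact(
        "Refund jane.doe@example.com, card 4111 1111 1111 1111, call 555-123-4567."
    )
    print(clean)  # Refund [EMAIL], card [CARD], call [PHONE].
    print(found)  # {'EMAIL': 1, 'CARD': 1, 'PHONE': 1}
```

The per-label counts can be logged, so reviewers can see how often sensitive data nearly left the organization, without storing the data itself.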

Data sent through the OpenAI API could be exposed

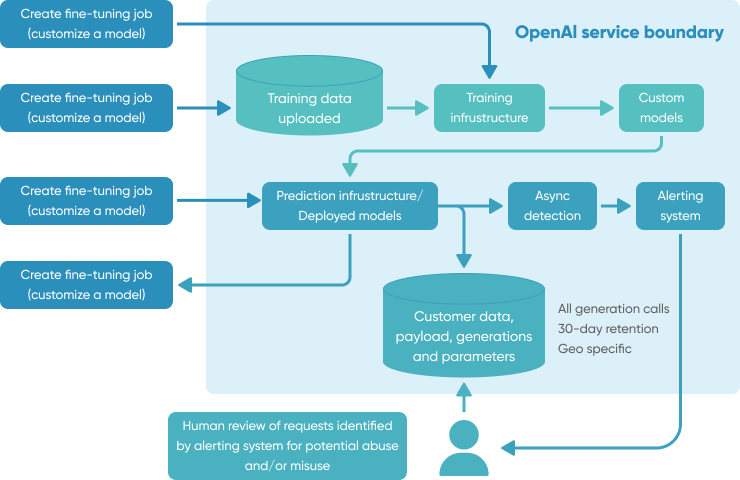

Data transmitted through OpenAI's API is also subject to exposure risks. OpenAI stores data sent via the API for up to 30 days to monitor for potential misuse or abuse. After 30 days, the data is generally deleted, unless OpenAI is required by law to retain it. During this storage window, the data could be exposed to OpenAI staff, to external subprocessors, and even to the public if a data leak occurs.

Additionally, any data users submit through the Files endpoint, such as training data for fine-tuning a customer's model, is kept until the user deletes it. This creates a significant data management burden, and a corresponding risk, for enterprises.

Access to this data is limited to an authorized group of OpenAI employees and certain third-party contractors, all of whom are bound by confidentiality and security obligations. Even with these precautions, storing data for any length of time poses a level of risk that enterprises should consider.

What you need to know:

- Data sent through the API is stored by OpenAI for up to 30 days for monitoring purposes, after which it's generally deleted unless the law dictates otherwise.

- User-submitted data via the Files endpoint is stored until the user decides to delete it, necessitating active data management.

- A limited number of authorized OpenAI employees and third-party contractors, bound by confidentiality and security obligations, can access this data.
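Uploaded files persist until someone deletes them, so it helps to track them against an internal retention policy. This Python sketch assumes your team keeps its own inventory of uploads. The `UploadedFile` record, its fields, and the file IDs are hypothetical and do not reflect OpenAI's schema. The sketch flags files older than a policy age. It also computes when a request's roughly 30-day monitoring window ends.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Stated upper bound on how long OpenAI keeps API data for abuse monitoring.
API_MONITORING_WINDOW = timedelta(days=30)


@dataclass
class UploadedFile:
    """Hypothetical inventory record for a file sent to the Files endpoint."""
    file_id: str
    purpose: str
    uploaded_at: datetime  # timezone-aware UTC timestamp


def monitoring_window_end(sent_at: datetime) -> datetime:
    """When API data sent at `sent_at` should generally be deleted (legal holds aside)."""
    return sent_at + API_MONITORING_WINDOW


def files_due_for_deletion(
    files: list[UploadedFile], max_age: timedelta, now: datetime
) -> list[str]:
    """IDs of uploads older than the internal retention policy allows.

    Files-endpoint uploads are not deleted automatically, so this policy
    is something your team defines and enforces.
    """
    return [f.file_id for f in files if now - f.uploaded_at > max_age]


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    inventory = [
        UploadedFile("file-a", "fine-tune", now - timedelta(days=45)),
        UploadedFile("file-b", "fine-tune", now - timedelta(days=5)),
    ]
    print(files_due_for_deletion(inventory, timedelta(days=30), now))  # ['file-a']
    print(monitoring_window_end(now))
```

Flagged IDs would then be deleted through the Files endpoint or the OpenAI dashboard. The 30-day constant mirrors OpenAI's stated policy and should be re-checked against current documentation.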

Compliance with GDPR is uncertain

OpenAI's methodology, which involves gathering substantial amounts of online content for AI training, can make meeting GDPR requirements significantly harder. A central concern is the potential use of personal data without explicit consent, and businesses must weigh this carefully when using OpenAI's services. The GDPR requires that any processing of personal data rest on a lawful basis, such as consent or legitimate interest.

Failure to comply with the GDPR can have severe repercussions, such as substantial fines, mandated data deletion, or even bans across the European Union. Businesses considering OpenAI should therefore thoroughly assess the legal basis for their data processing and implement the safeguards needed to protect individuals' data privacy and security.

What you need to know:

- In early 2023, the Italian Data Protection Authority (GPDP) accused OpenAI of violating EU data protection law. At the time of writing, the case is ongoing.

- MIT Technology Review reports OpenAI faces challenges in complying with EU data protection laws due to its use of data to train its ChatGPT models.

- The legality of OpenAI’s processing of data is still under review in many countries around the world. Enterprises should carefully consider the risks of utilizing this service in order to maintain compliance with GDPR and avoid fines.

Data is exposed to third-party subprocessors

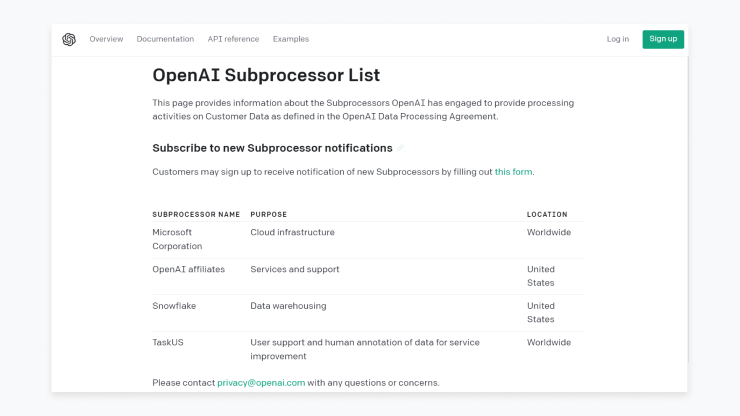

The use of subprocessors to handle customer data is another crucial aspect of data security and privacy for enterprises. OpenAI engages several subprocessors for various processing activities on Customer Data, as stipulated in the OpenAI Data Processing Agreement.

Subprocessors like Microsoft Corporation and Snowflake provide cloud infrastructure and data warehousing, respectively. Meanwhile, OpenAI affiliates provide services and support. TaskUS provides user support and also handles human annotation of data for service improvement. Because these subprocessors operate in locations around the world, they add another layer of complexity to the data security environment.

What you need to know:

- Subprocessors are engaged in various data processing activities, requiring careful consideration regarding their data handling practices.

- The global locations of subprocessors introduce the need for compliance with international data protection laws and regulations.

- OpenAI provides options for customers to receive notifications of new subprocessors.

- Evaluating the data processing agreement and subprocessor roles is crucial to understanding the full scope of data privacy and security when using OpenAI.

OpenAI models are not open for enterprises to evaluate

When enterprises incorporate AI models into their processes, understanding the underlying structure and training data of those models is often critical. However, despite the organization's name, OpenAI's models are not open: their exact training data and detailed model internals are not publicly available. This lack of transparency makes it harder for enterprises to judge how much confidence to place in the models' outputs.

Without precise knowledge of the training data, it's challenging for enterprises to evaluate the reliability or authenticity of the model's outputs. This can have significant implications, particularly in sensitive domains where decision-making is heavily reliant on AI input.

Moreover, the inability to scrutinize the model's structure and workings can make it difficult for enterprises to fully understand and manage the associated risks. This could potentially impact their risk assessment and governance policies around AI usage.

What you need to know:

- OpenAI's models are not "open" in terms of revealing their exact training data or intricate structural details.

- This lack of transparency makes it harder to judge how far to trust the model's outputs, posing a potential risk for enterprises.

- Without precise knowledge of the training data or model structure, assessing the reliability or authenticity of the results becomes challenging.

- This lack of insight could also impact enterprises' risk assessment and AI governance strategies.

Ways to Mitigate Security Risks

Having walked through the top data security and privacy concerns associated with OpenAI, it's clear that enterprises must prioritize safeguarding sensitive and confidential information when using AI applications. To mitigate these risks, organizations should adopt proactive measures that strengthen security.

In this section, we provide a list of practical strategies that can help you address these concerns and enhance the safety of your AI applications while using OpenAI:

Use Microsoft’s Azure OpenAI Service

Opting for Microsoft's Azure OpenAI service is a strategic choice for enterprises dealing with confidential information. Azure OpenAI is integrated with Microsoft's robust enterprise security and compliance controls, creating a more secure AI environment. Microsoft continuously works to enhance security and confidentiality policies for Azure OpenAI, mirroring the standards set for its other Azure services.

Read More: Microsoft’s Azure OpenAI Service

Follow OpenAI's security best practices

OpenAI publishes a set of safety best practices. Enterprises that choose to use OpenAI services should review them carefully and put internal safeguards and policies in place to align with them.

Read More: OpenAI Docs - Safety Best Practices

Establish prompt content monitoring procedures

Implement a system to log every prompt sent to OpenAI, and schedule regular security team reviews of these logs. This proactive approach helps identify and address potential security concerns quickly.
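Here is a minimal Python sketch of such logging. It assumes every OpenAI call in your codebase goes through a single function. `send_fn` below is a hypothetical placeholder for that function and is not part of the OpenAI SDK. Each call appends one JSON line recording the timestamp, the user, the prompt and its SHA-256 hash, the latency, and whether the call succeeded.

```python
import hashlib
import io
import json
import time
from datetime import datetime, timezone
from typing import Callable, TextIO


def audited_call(
    send_fn: Callable[[str], str], prompt: str, user: str, log: TextIO
) -> str:
    """Call send_fn(prompt) and append one JSON audit record to `log`.

    send_fn is a hypothetical stand-in for whatever function in your code
    actually calls the OpenAI API; it is not an OpenAI SDK function.
    """
    started = time.perf_counter()
    status = "error"
    try:
        response = send_fn(prompt)
        status = "ok"
        return response
    finally:
        # Runs on success and on exceptions, so failed calls are logged too.
        record = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "status": status,
            "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
            "prompt_chars": len(prompt),
            "prompt": prompt,  # the log is now sensitive: restrict access to it
            "latency_ms": round((time.perf_counter() - started) * 1000, 1),
        }
        log.write(json.dumps(record) + "\n")


if __name__ == "__main__":
    buf = io.StringIO()
    # A fake sender stands in for the real API call in this demo.
    print(audited_call(lambda p: p.upper(), "summarize Q3 churn", "alice", buf))
    print(buf.getvalue())
```

The log contains the prompts themselves, so it needs the same access controls as the data those prompts might hold. In production, the in-memory buffer would be replaced by an append-only file or a log pipeline.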

Several AI monitoring tools can systematically track prompts, token usage, response times, and more:

- Helicone offers a targeted solution built explicitly for teams who want to track costs, usage, and latency for their GPT applications, all with just one line of code.

- Other tools like Datadog have built OpenAI integrations, which can allow teams to automatically track usage patterns, error rates, and more.

- If you're building your own ML models, Fiddler could be a good fit for comprehensive MLOps support.

Consider other AI tools with enterprise-level security

Diversifying your AI toolbox with other tools that prioritize enterprise-grade security can provide additional layers of protection and mitigate potential security risks. There are many AI tools on the market that have been specifically designed with enterprises in mind that may be a better fit from a security and data protection perspective than OpenAI.

Read More: 6 Proven GPT-3 Open-Source Alternatives [2023 Comparison]

Don't share critical data, share only data structure information

Minimize risk by sharing only the necessary data structure information (such as database table and column names) with OpenAI. The model can then generate data queries that you execute within your own secure environment, without exposing the underlying sensitive data.
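This Python sketch illustrates the idea with the standard library's `sqlite3` module. It reads only table and column metadata, never row data, and builds a prompt from that schema. The prompt wording and the `build_query_prompt` helper are illustrative assumptions.

```python
import sqlite3


def schema_summary(conn: sqlite3.Connection) -> str:
    """List each table with its column names and declared types; no rows are read."""
    tables = conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
    ).fetchall()
    lines = []
    for (table,) in tables:
        quoted = '"' + table.replace('"', '""') + '"'
        # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)
        cols = conn.execute(f"PRAGMA table_info({quoted})").fetchall()
        col_desc = ", ".join(f"{c[1]} {c[2]}" for c in cols)
        lines.append(f"{table}({col_desc})")
    return "\n".join(lines)


def build_query_prompt(schema: str, question: str) -> str:
    """Hypothetical prompt template: schema plus question, no data values."""
    return (
        "Using only the SQLite schema below, write one read-only SELECT query.\n\n"
        f"Schema:\n{schema}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT, region TEXT)")
    conn.execute("INSERT INTO customers VALUES (1, 'jane@example.com', 'EU')")
    # The prompt contains column names only, never 'jane@example.com'.
    print(build_query_prompt(schema_summary(conn), "How many customers are in each region?"))
```

The SQL the model returns would then be reviewed and run locally, ideally over a read-only connection, so query results never leave your environment. Table and column names can themselves reveal information, so they are worth reviewing before sharing.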

Conclusion - Is OpenAI Safe?

Enterprises considering the use of OpenAI should be aware of the data security risks involved. While OpenAI has implemented security measures such as SOC 2 compliance and bug bounty programs, concerns remain regarding the use of conversation data, potential exposure of data sent through the API, compliance with GDPR, and limited transparency into model details. Mitigating these risks requires careful evaluation, proactive measures, and consideration of alternative AI tools with stronger enterprise-level security and transparency.

If you're looking for help developing a custom enterprise AI application, consider our AI development services. Our team can help you put together a comprehensive AI strategy and guide you through the development process. With our software development consulting services, you can leverage the power of AI models to build a market-ready product that stands out from the competition.
