New solution for an old problem

You probably know perfectly well that data is a tricky beast to tame. In many organizations it grows exponentially, and almost anything can be a source of valuable information. Those sources and catalogs are becoming more diverse and increasingly hard to process.

If you have completed the third step of the Data Flywheel strategy, building data-driven applications, you can easily be overwhelmed by the multitude of sources. Those applications continually bring in new data on customer behaviors, patterns, and preferences.

The obvious approach is to tackle the issue with well-known techniques. Managing data is not a problem invented in the past few years; we can build on many years of research and technological evolution in the data warehouse space.

There is one problem with this approach. In today's world, static data solutions are insufficient to produce the analytics your business needs.

These stagnant data warehouses are usually:

- Siloed: with data trapped in silos, seeing the big picture is nearly impossible.

- Delayed: analytics based on yesterday's data arrives too late to gain a competitive advantage.

- Expensive: when performing analytics costs more than the value of its insights, nobody wins.

- Cumbersome: you might get an analytics platform running, but few people will be able to use it efficiently.

- Limited to specialists: making more data-driven decisions requires democratizing analytics.

With all that in mind, how can your business possibly capture, store, and analyze its data at the near-real-time rate needed to remain competitive? To solve these issues and more, I would recommend taking a closer look at a data lake architecture.

What is a data lake?

In simple words, a data lake is a centralized repository that allows you to store all your structured and unstructured data at any scale. You can store your data as-is, without having to structure it first, and run different types of analytics on it, from dashboards and visualizations to big data processing, real-time analytics, and machine learning, to guide better decisions.
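To make "store as-is, structure later" (often called schema-on-read) more concrete, here is a minimal, stdlib-only Python sketch. The sample events, field names, and the `read_with_schema` helper are hypothetical; in an AWS data lake the raw files would sit in Amazon S3 and a query engine would apply the schema, but the idea is the same: the raw data is never rewritten, and each consumer projects its own schema onto it at read time.

```python
import json
from datetime import datetime

# Raw events land in the lake exactly as producers emitted them (hypothetical sample data).
RAW_EVENTS = [
    '{"user": "u1", "action": "view", "ts": "2024-01-15T10:00:00", "item": "A"}',
    '{"user": "u2", "action": "click", "ts": "2024-01-15T10:01:30"}',
    '{"user": "u1", "action": "purchase", "ts": "2024-01-15T10:05:00", "item": "A", "price": "19.99"}',
]


def read_with_schema(raw_lines, schema):
    """Apply a schema at read time: pick the requested fields and cast them.

    Fields missing from a record become None instead of failing the whole read.
    """
    for line in raw_lines:
        record = json.loads(line)
        yield {
            field: (cast(record[field]) if field in record else None)
            for field, cast in schema.items()
        }


# Two consumers read the same raw data with different schemas.
audit_schema = {"user": str, "ts": datetime.fromisoformat}
sales_schema = {"item": str, "price": float}

if __name__ == "__main__":
    for row in read_with_schema(RAW_EVENTS, sales_schema):
        print(row)
```

Because the schema lives with the reader rather than the storage, adding a new consumer (say, a fraud model that needs `price` and `user`) requires no migration of the stored data.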

Why do you need a data lake?

At first sight, it may look like an unnecessary hassle and a black hole for your data. Why would you want to store and combine both structured and unstructured data in a single place?

It turns out that organizations that successfully generate business value from their data outperform their peers. According to an Aberdeen survey, organizations that implemented a data lake outperformed similar companies by 9% in organic revenue growth.

These leaders were able to run new types of analytics, such as machine learning, over new sources stored in the data lake, such as log files, click-stream data, social media, and internet-connected devices. This helped them identify and act on growth opportunities faster by attracting and retaining customers, boosting productivity, proactively maintaining devices, and making informed decisions.

As mentioned above, such an architecture lets you store all your data once, in a single place and in open formats, so that many types of analytics and machine learning services can analyze it faster and more efficiently than traditional, siloed approaches allow.

By leveraging a data lake architecture, multiple groups within your organization can benefit from analyzing a consistent pool of data that spans the entire business. A data lake and analytics architecture breaks down walls and eliminates silos, allowing you to collect, process, store, and analyze all of your data more efficiently. With this architecture, you can derive more accurate and timely insights that drive smarter decisions, boost business outcomes, and give your Data Flywheel a further push toward self-sustaining momentum.

Let’s talk about concrete examples. Take Zillow, for instance: it has built a data lake on Amazon S3 that stores petabytes of data and uses AWS analytics services, including Amazon EMR and Amazon Kinesis, to perform site personalization, optimize advertising, and build recommendations for over 100M homes.

Another great example is FINRA, which uses Amazon S3 as a data lake and Amazon EMR to look for fraud, market manipulation, insider trading, and abuse. According to the source, it operates at 60% lower cost than with its previous on-premises solutions.

Not convinced yet? Consider Redfin, where a data lake made it possible to innovate cost-effectively and manage data on hundreds of millions of properties. Last but not least, there is Nasdaq, which optimized performance, availability, and security while loading seven billion rows of data per day.

How can AWS help you with that?

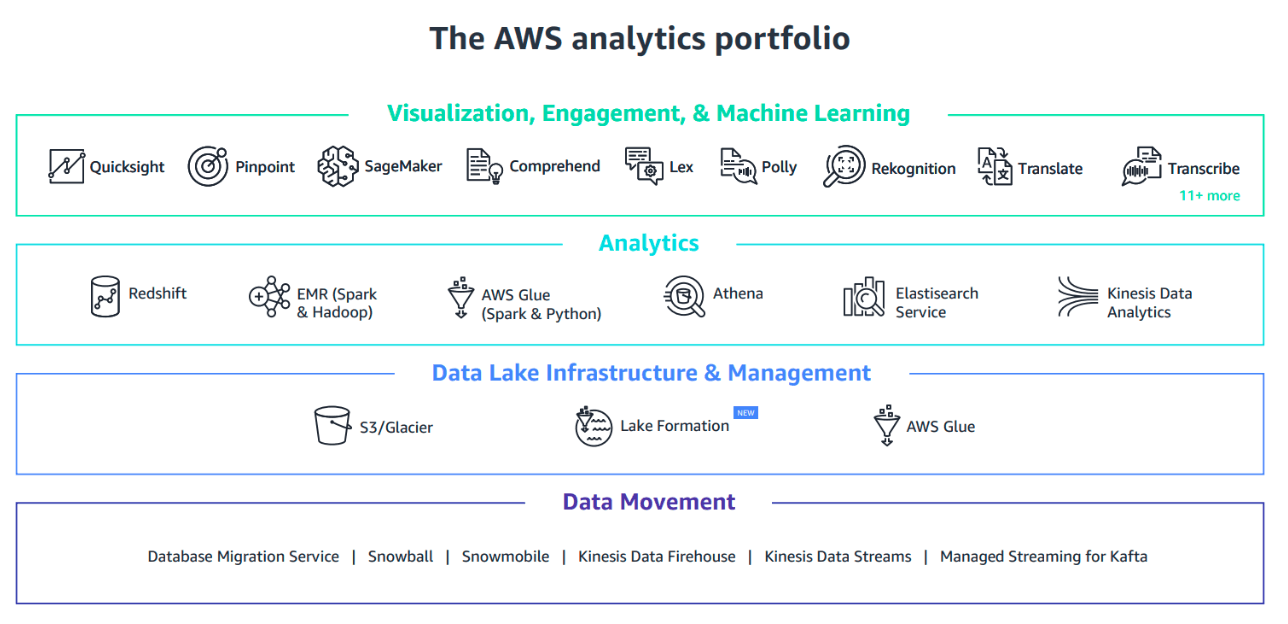

AWS provides the most comprehensive, secure, and cost-effective portfolio of services for every step of building a data lake and analytics architecture. These services include data migration, cloud infrastructure, management tools, analytics services, visualization tools, and machine learning.

Let’s analyze the available solutions.

Data Lake Setup and Maintenance

When it comes to setting up the data lake, AWS offers a dedicated, fully managed service called AWS Lake Formation. It allows you to build a secure data lake with just a few clicks. You define where your data resides and which policies you want to apply. Lake Formation then collects and catalogs the data, moves it into your Amazon S3 data lake, cleans and classifies it using machine learning (ML) algorithms, and secures access to your sensitive data, relying on AWS Glue for much of this work.
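Once data lands in Amazon S3, how you lay out object keys matters. One common convention, assumed here rather than required by Lake Formation, is Hive-style `key=value` partition folders, which AWS Glue crawlers and Amazon Athena can recognize as partition columns. The sketch below only builds key strings; the zone name, dataset name, and bucket `example-data-lake` are hypothetical.

```python
from datetime import datetime, timezone


def partitioned_key(dataset: str, event_time: datetime, filename: str, zone: str = "raw") -> str:
    """Build an S3 object key using Hive-style key=value partition folders.

    Assumed layout: <zone>/<dataset>/year=YYYY/month=MM/day=DD/<filename>
    `event_time` should be timezone-aware; it is normalized to UTC so that
    producers in different time zones write to the same partitions.
    """
    t = event_time.astimezone(timezone.utc)
    return (
        f"{zone}/{dataset}/"
        f"year={t.year:04d}/month={t.month:02d}/day={t.day:02d}/"
        f"{filename}"
    )


def s3_uri(bucket: str, key: str) -> str:
    """Join a bucket name and object key into an s3:// URI."""
    return f"s3://{bucket}/{key}"


if __name__ == "__main__":
    ts = datetime(2024, 1, 15, 10, 0, tzinfo=timezone.utc)
    key = partitioned_key("clickstream", ts, "part-0001.json.gz")
    print(s3_uri("example-data-lake", key))
```

Partitioning by date lets query engines skip whole folders when a query filters on `year`, `month`, or `day`, which reduces both scan time and cost.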

Interactive Analytics

For interactive analytics, the main workhorse is Amazon Athena, a serverless interactive query service. It analyzes data directly in Amazon S3 and Amazon Glacier using standard SQL. There is no infrastructure to set up or manage: you start querying instantly, get results in seconds, and pay only for the queries you run.
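As a sketch of what "start querying instantly" looks like from code, the helper below assembles keyword arguments for the boto3 Athena client's `start_query_execution` call (`QueryString`, `QueryExecutionContext`, `ResultConfiguration`, `WorkGroup`, `ClientRequestToken`). The database, table, and results bucket are hypothetical, and the actual AWS call is left in a comment so the example runs without credentials.

```python
import uuid


def build_athena_request(sql: str, database: str, output_location: str,
                         workgroup: str = "primary") -> dict:
    """Assemble keyword arguments for boto3's Athena start_query_execution."""
    if not output_location.startswith("s3://"):
        raise ValueError("output_location must be an s3:// URI")
    return {
        "QueryString": sql,
        "QueryExecutionContext": {"Database": database},
        "ResultConfiguration": {"OutputLocation": output_location},
        "WorkGroup": workgroup,
        # Unique token that makes the request idempotent (the query runs only once).
        "ClientRequestToken": str(uuid.uuid4()),
    }


# Hypothetical table partitioned by year/month; filtering on partitions limits the data scanned.
SQL = (
    "SELECT action, count(*) AS events "
    "FROM clickstream WHERE year = '2024' AND month = '01' "
    "GROUP BY action"
)

request = build_athena_request(SQL, "analytics", "s3://example-athena-results/")

# With AWS credentials configured, the request could be sent like this (not executed here):
#   import boto3
#   athena = boto3.client("athena")
#   query_id = athena.start_query_execution(**request)["QueryExecutionId"]
```

Athena runs queries asynchronously, so in practice you would poll the query status by its ID and then fetch the results that Athena writes to the output location.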

Big Data Processing

Amazon EMR probably needs no introduction, as it is one of the best-known services on the AWS platform. It processes vast amounts of data using the Apache Spark and Apache Hadoop frameworks easily, quickly, and cost-effectively. This managed service supports 19 different open source projects and offers managed EMR Notebooks for data engineering, data science development, and collaboration.
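The frameworks EMR runs are built around splitting work into a map step and a reduce step that a cluster executes in parallel. The single-process word count below is only an illustration of that model in plain Python; it is not EMR or Spark code. In Spark, the same pipeline would roughly correspond to `flatMap`, `map`, and `reduceByKey` on an RDD, with the framework distributing each phase across nodes.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line: str):
    """Emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs):
    """Group values by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Sum the counts collected for each word."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(lines):
    """Run map, shuffle, and reduce over an iterable of text lines."""
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    return reduce_phase(shuffle(mapped))


if __name__ == "__main__":
    print(word_count(["the data lake", "The lake of data"]))
```

Each `map_phase` call depends only on its own line, and each reduction depends only on one key's values, which is exactly what lets a cluster spread the work over many machines.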

Data Warehousing

In this space, AWS offers a fully managed service, Amazon Redshift. It lets you run complex analytic queries against petabytes of structured data at less than a tenth of the cost of traditional solutions. It also includes Redshift Spectrum, which runs SQL queries directly against exabytes of structured or unstructured data in Amazon S3, with no unnecessary data movement.

Real-time Analytics

Here, the key service is Amazon Kinesis. It collects, processes, and analyzes streaming data, such as IoT telemetry, application logs, and website click-streams, as it arrives in your data lake. As a result, you can respond in real time instead of waiting until all your data is collected.
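Responding "as data arrives" usually means aggregating over short time windows instead of over a complete dataset. The sketch below shows a tumbling-window count of page views, the kind of logic a consumer of a Kinesis stream might apply to click-stream records. An in-memory list stands in for the stream, the `(timestamp, page)` event shape is hypothetical, and nothing here uses the Kinesis API.

```python
from collections import Counter
from typing import Iterable, Iterator, Tuple

Event = Tuple[int, str]  # (epoch seconds, page) - hypothetical record shape


def tumbling_window_counts(events: Iterable[Event],
                           window_seconds: int = 60) -> Iterator[Tuple[int, Counter]]:
    """Yield (window_start, page counts) each time a fixed-size window closes.

    Assumes events arrive in timestamp order; a real stream consumer would
    also need to handle late and out-of-order records.
    """
    current_start = None
    counts = Counter()
    for ts, page in events:
        start = ts - ts % window_seconds  # align the timestamp to its window
        if current_start is not None and start != current_start:
            yield current_start, counts  # the previous window is complete
            counts = Counter()
        current_start = start
        counts[page] += 1
    if current_start is not None:
        yield current_start, counts  # flush the last, possibly partial, window


if __name__ == "__main__":
    stream = [(0, "home"), (10, "cart"), (59, "home"), (60, "home"), (125, "checkout")]
    for window_start, page_counts in tumbling_window_counts(stream):
        print(window_start, dict(page_counts))
```

Each result is available as soon as its window closes, so a dashboard or alert can react within seconds rather than after a nightly batch.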

Operational Analytics

We should not forget this vital area, which is covered by a fully managed service called Amazon Elasticsearch Service. It lets you search, explore, filter, aggregate, and visualize your data in near real time, enabling fast, simple application monitoring, log analytics, click-stream analytics, and more.
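For log analytics, a typical operation is "filter recent events, then aggregate". The sketch below builds such a request body in the Elasticsearch query DSL: a `bool` filter with `term` and `range` clauses plus a `terms` aggregation. The field names `level`, `service`, and `@timestamp` are hypothetical and assume keyword-mapped fields; the body would be sent to an index's `_search` endpoint.

```python
import json


def recent_errors_by_service(minutes: int = 15, size: int = 10) -> dict:
    """Build a search body that counts error log lines per service over the last N minutes."""
    return {
        "size": 0,  # return only the aggregation, not individual documents
        "query": {
            "bool": {
                "filter": [
                    {"term": {"level": "error"}},
                    {"range": {"@timestamp": {"gte": f"now-{minutes}m"}}},
                ]
            }
        },
        "aggs": {
            # Top `size` services by number of matching log lines.
            "by_service": {"terms": {"field": "service", "size": size}}
        },
    }


if __name__ == "__main__":
    print(json.dumps(recent_errors_by_service(), indent=2))
```

Using `filter` rather than `must` skips relevance scoring, which is usually what you want for monitoring queries that only count matches.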

Dashboards and Visualizations

Thanks to Amazon QuickSight, you can quickly build stunning visualizations and rich dashboards that can be accessed from any browser or mobile device, without relying on third-party services.

Recommendations, Forecast, and Prediction

Here I would like to highlight three new services. The first is Amazon Personalize, which improves customer engagement with ML-powered product and content recommendations, tailored search results, and targeted marketing promotions. No prior ML experience is necessary: you provide an activity stream from your applications, and the service identifies what is meaningful, selects the right algorithms, and trains and optimizes a customized personalization model.
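The "activity stream" you provide is typically imported as an interactions dataset, where `USER_ID`, `ITEM_ID`, and `TIMESTAMP` (Unix epoch seconds) are the required fields; `EVENT_TYPE` is optional. The sketch below converts application events into that CSV layout. The input event dictionary shape is hypothetical, and in practice you would also register a matching dataset schema in Personalize and import the file from Amazon S3.

```python
import csv
import io


def to_interactions_csv(events) -> str:
    """Convert activity events into CSV with USER_ID, ITEM_ID, TIMESTAMP, EVENT_TYPE columns.

    Each event is assumed (hypothetically) to be a dict with 'user', 'item',
    'action', and a timezone-aware datetime under 'time'.
    """
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["USER_ID", "ITEM_ID", "TIMESTAMP", "EVENT_TYPE"])
    for event in events:
        if not event.get("item"):
            continue  # events without an item carry no user-item signal
        epoch_seconds = int(event["time"].timestamp())
        writer.writerow([event["user"], event["item"], epoch_seconds, event["action"]])
    return buf.getvalue()
```

Filtering out item-less events at export time keeps the training data focused on the user-item interactions a recommender actually learns from.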

The second is Amazon Forecast, which, like Amazon Personalize, requires no prior ML experience and provides accurate time-series forecasts. Based on the same technology used at Amazon.com, it uses machine learning to combine time-series data with additional variables to build forecasts.
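When evaluating a managed forecasting service, it helps to have a simple baseline to compare against. The sketch below is a naive moving-average forecast in plain Python. It is not the algorithm Amazon Forecast uses; it only illustrates what a time-series forecast produces (future values derived from past observations) and gives you a yardstick an ML model should beat.

```python
from typing import List, Sequence


def moving_average_forecast(history: Sequence[float], window: int, horizon: int) -> List[float]:
    """Forecast `horizon` future points by repeatedly averaging the last `window` values.

    Each forecast is appended to the working series, so later steps build on
    earlier forecasts (a recursive multi-step baseline).
    """
    if window <= 0 or horizon < 0:
        raise ValueError("window must be positive and horizon non-negative")
    if len(history) < window:
        raise ValueError("need at least `window` observations")
    series = list(history)
    forecasts: List[float] = []
    for _ in range(horizon):
        next_value = sum(series[-window:]) / window
        forecasts.append(next_value)
        series.append(next_value)
    return forecasts


if __name__ == "__main__":
    weekly_sales = [120, 135, 128, 150, 160, 155]  # hypothetical data
    print(moving_average_forecast(weekly_sales, window=3, horizon=2))
```

A baseline like this ignores seasonality, trends, and related variables such as prices or holidays, which is precisely the extra signal a service that "combines time series data with additional variables" aims to capture.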

Last but not least, there is Amazon SageMaker. This fully managed service makes it easy to build, train, and deploy predictive ML models. It provides everything you need to connect to your training data, select and optimize the best algorithm and framework, and deploy your model on auto-scaling clusters of Amazon EC2 instances.

Summary

Putting data to work is one of the biggest challenges for growing and established businesses alike. Your data should be an asset, not a liability. However, we know how hard that can be, especially if you are struggling with the infrastructure.

Any help from your cloud vendor is more than welcome, because it lets your company focus on its core business instead of increasing OpEx and building operational know-how. A data lake architecture gives you a tremendous advantage, drives innovation, and may reveal unique selling points of your business hidden in the ocean of data.

Why not trust a cloud provider in this area and focus on innovation and your core business? With its broad portfolio of services, AWS lets you cover your needs by implementing a data lake that will offer unique opportunities for the foreseeable future.